After long silence, due sadly to a lack of conference activity… here is my prepared piece for the Early Middle English Society’s five-person roundtable on editing Oxford, Bodleian Library MS Laud Misc. 108, which the session chair read for me at the 44th International Medieval Congress, Kalamazoo, Michigan.

Editing Oxford, Bodleian Library MS Laud Misc. 108:

A Technology-Supported Perspective

Sharon apologizes for being unable to present her thoughts herself but has provided a short piece, written conveniently in the third person! These thoughts emphasize some considerations that arise from both Sharon’s medievalist training and her current occupation as digital publications manager for Berkeley’s Mark Twain Project.

The topic of collaborative humanities projects has been raised repeatedly, most often by digital humanists with one eye on what our colleagues in the sciences have come to consider their primary modus operandi for publishable work. Collaboration is a recurring topic of discussion on the Humanist listserv, founded and moderated by Willard McCarty; it surfaces at a variety of conferences and in casual conversation. Two weeks ago, Lisa Spiro, director of the Digital Media Center at Rice University’s Fondren Library, posted to her blog some preliminary results from comparing single and joint authorship of articles in two quarterly journals, American Literary History and Literary & Linguistic Computing. About two percent of ALH‘s articles, or an average of one per year, had two authors; forty-eight percent of LLC‘s articles had two or more authors, and a majority of those authors spanned multiple institutions. Both Speculum‘s online index and Sharon’s personal collection of issues are incomplete, but for the same years Spiro examined, 2004 to 2008, in Review of English Studies we find five jointly authored articles—about the same ratio as for ALH—while Chaucer Review has zero. Monographs in our field share similar percentages, as do editions of texts; rare recent exceptions to the latter include the re-edition of Klaeber’s Beowulf by R. D. Fulk, Robert E. Bjork, and John D. Niles, and several teaching editions published by the TEAMS imprint.

The most obvious bar to collaboration in the humanities is the valorization of a unique, individual perspective on certain difficult analytical problems. This valorization has a pragmatic component: if you see compelling readings that no one has noticed, you probably don’t need or want a co-writer in preparing your article for publication. Preparing a critical edition of an entire manuscript of 238 folios (or 476 pages) is, obviously, a different proposition simply in terms of scope, and as others have discussed today, it is exactly the sort of mid-sized project that would benefit from multiple editors and pooled expertise. Given that review committees often continue to wish that individual professors publish individual work, it should still be possible to generate individually owned articles, but with a small team of editors, it will also be possible to create editions with better balance and greater speed than one or two scholars working alone could manage.

Dare one assert that a team of editors will enable peer learning and teaching in ways that individual scholarship does not invite? One bar to humanities innovation currently under scrutiny is the gap between what a scholar would like to produce, on one hand, and on the other, the knowledge of tools that would enable that production. Large-scale collaborations amongst multiple institutions, such as HASTAC, INKE, and Project Bamboo, are exploring ways to narrow that gap. Johanna Drucker’s recent opinion piece for the Chronicle of Higher Education, titled “Blind Spots,” rightly chastises faculty for hoping that library and technical professionals will take care of the hard, practical aspects of designing digital tools that support scholarship. Others have attempted—so far without much success—to convince faculty that the so-called millennial generation will not learn technology for them and save them the trouble. It will not do to be a lone researcher who hires grad-student talent or leans upon library staff to handle the text encoding, the database design, the project planning and adjustments in scope, the transformations to readable output, and the research into new tools that might smooth a final task. Each of these component parts involves intellectual responsibility, just as keenly as choosing whether to emend a line for semantic and metrical reasons, or deciding how much depth to allot to a text’s annotation. If one wants to take credit for a project’s final form, one must also be able to take responsibility for the decisions that lead there. At the same time, because it is functionally impossible to juggle all of these aspects gracefully or to learn each of them with equal depth, we come back to the concept of a collaboration of peers with complementary expertise….

For those who can juggle a little, a rich array of tools exists now from which to choose, many of them free and open-source, thanks to efforts wholly unrelated to textual editing and centered instead upon software development. One challenge is indeed to benefit “the right amount” from what other sectors have developed to support collaboration, distributed projects, and project planning, without becoming lost in absurd details we don’t need to worry about or spending time learning skills and tools that don’t actually pertain. It is helpful, regardless, to be able to draw from nearby models. For the Early Middle English Society’s incipient project, our preliminary tools include a wiki where we can share resources and record decisions, shared documents at Zoho, a listserv for discussion, and server space for lodging data files–essentially the low-budget version of what a distributed software project might use. As we dig in and establish deadlines, we might pick up the free version of 37signals’s Basecamp in order to have a central location for milestone deadlines and reminders. To date, only the server space has required a fee (which Sharon pays out of pocket).

To pick up an issue from Scott’s piece: what would be needed from a technical standpoint to produce both student and scholarly editions of Laud 108 under a collaborative aegis? Most important, after the editing team, is a framework for recording editorial decisions and conveying the result to readers—something that contemporary tools and processes have been shifting more firmly onto textual editors, away from publishers.

Five, ten, even fifteen years ago, scholars who edit texts were asked to consider adopting structured markup and to use the guidelines of the Text Encoding Initiative (TEI), because it was “the right thing to do.” Because TEI was an application of SGML, then of XML, TEI-encoded files could maintain a useful separation between descriptive markup and prescriptive output; that is, it’s the distinction between this is a rubric or this is a catchword, and format all catchwords flush right, two picas smaller than the main text. Now TEI has become a tacit standard, with a lively community that supports new and experienced practitioners alike with documentation, listservs, working groups, a wiki, and a long list of successful projects from which to glean inspiration. Despite its steep learning curve, TEI remains prominent because it precludes complete reinvention of the wheel in terms of encoding a manuscript’s physical description and history, evidence of damage or prior hands’ intervention, and so on.

For a collaboration, TEI has the great additional advantage of facilitating self-documentation: notes to fellow editors, notes about manuscript damage, and notes intended to be published as annotation can coexist readily in the working files. Better, those temporary notes to colleagues can be vanished en masse, via an automated script, prior to publication. Contrast similar expressions in a standard word-processing application or a shared environment like Google Docs, where multiple types of notes would want multiple colors or font changes and would lead to thickly illegible quasi-“marginalia,” which can be hard to understand in situ and are terrible to eradicate prior to setting up camera-ready copy.

Part of TEI’s learning curve, or indeed that of any output-independent framework, is that the editors must shape the encoded text’s anticipated output. The process of planning the forms that output will take begins ideally before editing and encoding do. In a perfect world, there would be time enough to encode every important aspect of a manuscript text with absolute consistency; in a world with deadlines and the will to produce a useful edition that can actually be completed, the extent of encoding depends somewhat upon the textual aspects that the published edition will need to support—or editions, plural: scholarly and student-oriented.

If we wanted primarily to create a critical edition of Laud 108’s texts, we’d record all emendations, scribal alterations, changes of hand, marginalia, and decoration, as well as collate substantive variant readings from other manuscripts. If we wanted primarily to create a reading text for students and had no interest in a full critical edition, we might feel less compelled to record every single substantive, and our annotation would skew differently, towards basic linguistic and historical help. We might show some apparatus but format it differently; considering the strengths of a digital preparation, we’d probably feel obligated to prepare not only a glossary but a complete linguistic concordance of word forms as they appear in Laud 108. On the other hand, if we mean from the outset to create both types of edited text from a single set of encoded files, we can record textual apparatus and commentary as well as all desired annotation in the same master fileset, then switch types of editorial content on and off in the critical edition and the student edition. Similarly, if we decided to expand our ambitions to metrical encoding for a subsequent “release,” and if that work revealed some changes to textual commentary or prompted further annotation, we wouldn’t have to pull a Will Langland and choose between prior versions as the basis for the metrical enhancement; we could add our improvements to the master fileset, flag them as revisions specific to the metrical iteration, and still retain the ability to output a student edition. This flexibility might sound simplistic, but compared to what transpires when books are set up in Quark Xpress or Adobe InDesign and an editor wants to enter changes prior to a reprint run, it’s amazingly liberating.

Sharon has mocked up a sequence of examples to illustrate having a single encoded file from which it’d be possible to express or export only what’s needed for a given display context. Because it’s more difficult than it might seem to produce a compelling mockup of publication-quality layout for display in a Web browser, these humble examples move from transcription to a hypothetical display that editors might use to check their work prior to adding annotation.

Let’s take a quick look at slide two…

…which was produced by scanning a microfilm frame at 800 dots per inch, then cropping and resizing the resulting TIFF file. Laud 108 presents text in two columns, so its verse is given in short lines; this is the upper left corner of folio 221 verso, part of King Horn, where Rymenhild scolds Athelbrus for pretending to be Horn, calls him a thief, and bids him leave her chamber.

Slide three shows a screen shot of what is, for Sharon’s purposes, the simplest possible encoding of a transcription from that segment of Laud 108.

The greenish text Sharon has circled is Unicode, to record “thorn,” long “s,” and dotted “y”; though abbreviations are expanded, the mark that indicates their presence is recorded, and two glyph forms of round “r” are recorded as well.

The next slide was created by scanning the printed facsimile edition of Harley 2253, published by the Early English Text Society in 1965.

Like Laud 108, Harley 2253 is an anthology containing saints’ lives as well as other texts. Laud, Harley, and Cambridge University Library Gg.4.27 contain the three extant texts of King Horn, and the Harley text derives from the same branch of transmission as Laud, whereas the Cambridge text is relatively distinct. In Harley 2253, as you can see, King Horn is given in long lines, with hairlines marking the ends of line segments.

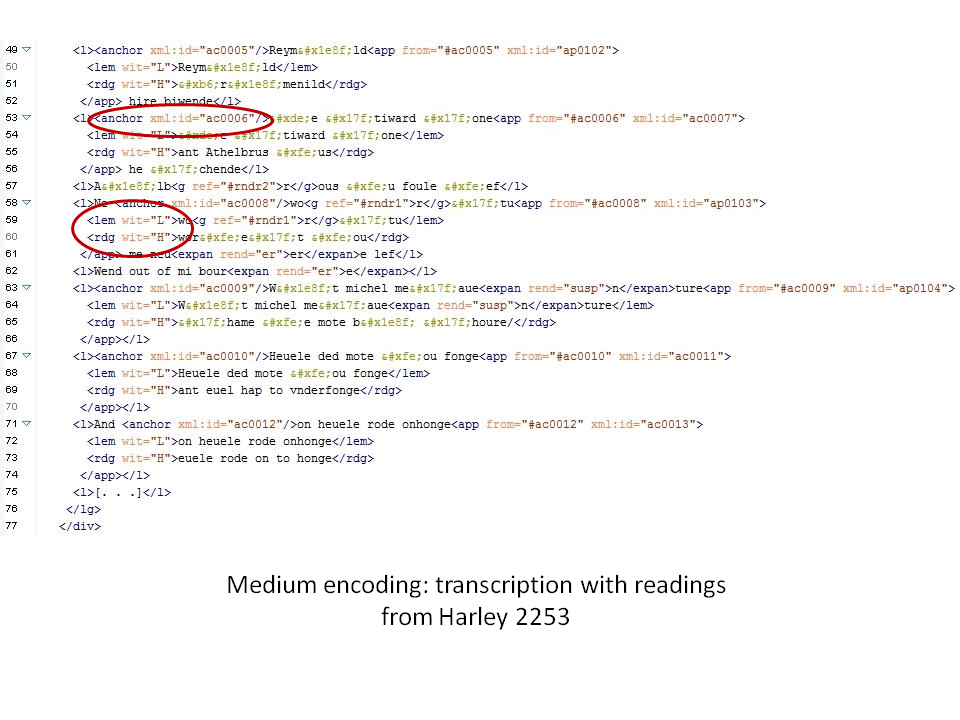

Slide five shows an expanded version of our earlier transcription.

In addition to everything that was recorded previously, this version includes readings from Harley wherever Laud and Harley differ substantively. At this point, you might notice, we have more XML than transcription. The XML anchors—one is circled—mark where a variant reading begins, and “app” entries containing “lemma” and “reading” information encode the variants themselves. It would be possible just to use “app,” as the TEI Guidelines suggest in chapter twelve, but Sharon’s experience with usability testing suggests that readers prefer to know where an apparatus entry begins, not only where it ends.

Sharon’s next slide is easier to read:

…this is just one way that all those pointy XML tags could be rendered in HTML, for display in a standard Web browser. It looks rather like a friendly translation of the basic transcription, in fact, but it derives from the Laud-plus-Harley version in slide five, ignoring most of the encoding that that slide showed.

The final slide is merely one quick choice for expressing the Laud and Harley readings literally alongside each other, for example to aid in proofreading the transcriptions before imposing editorial decisions upon them.

This version extracts all significant information from the Laud-plus-Harley encoding of slide five, and dumps it to HTML with colored brackets. One might conceivably choose to emend Laud in favor of Harley, which would involve swapping “lemma” and “reading” tags in the XML; if that took place, it’d be easy to regenerate the HTML dump and see purple in place of blue on the corresponding line. That ease is essentially why an editor might go to the trouble of using these tools: after the XML has gained editorially supplied punctuation, capitalization, and choices of reading for the final published text, it is equally straightforward—relatively speaking—to generate HTML that conveys those decisions gracefully to the reader.

Sharon has talked a bit about the advantages of collaboration from a technologically informed perspective; chief amongst these is the pooling of expertise amongst peers to achieve a richer edition than a lone scholar could endeavor to produce. For any edition that exists digitally, of course, there are further issues for which we have no time for a deliberate overview, including Web delivery options, strategies for building solid browse and search functionality, and the important concerns of preservation and sustainability. This is not the place to debate XTF versus Philologic, for better or worse…. Sharon hopes that what’s been shared here is enough to help spur discussion. Thank you.